Hello everyone,

Before running PEAR for read merging, I used fastqc to visualized the quality of the reads before proceeding with read stitching. After stitching the reads I observed a decrease in the total number of sequence in the stitched reads com[pared to the unstitched read as shown in the pictures. The pear reports revealed that 36.8% reads were discarded. My questions are (1.) why were those reads? is it because they failed to meet the quality score threshold or because they are uncalled bases?. **(2)**Could it be that the discarded reads were responsible for the loss in reads and why is this happening?. Using PEAR, I used the default parameters in merging the forward and reverse reads. I look forward to your marvelous answers

Hello again @madbullahi,

Your read quality looks good on this run! Without more debug details from the program itself I'm not sure what's going on...

Does PEAR tell you why these reads were discarded? If not, it may be worth trying to join with vsearch, as it give you more details about which reads fail to join and why, so you can know what settings would change your results.

Thank you, Colin, I will try VSEARCH as you suggested.

Hello @colinbrislawn

Another question: If you take a look at the Perbase sequence content, the runs fails for this, due to sequence-specific bias. ****(1)What could be the reason for this? is it because of the 16S sequence variation of the bacterial community in the sample been analyzed or due to the presence of overrepresented sequences?. (2) is there a way to correct for this before downstream analysis? Thank you

This is not totally unexpected for amplicons, compared to untargeted transcriptomics, as amplicons are all from the same part of the same gene so conserved regions with the same bases are expected. While these reads may be genuine, this can still cause problems for the Illumina sequencing platform, which is why PhiX is added to the libraries prior to sequencing.

Can you post the results that fastqc shows for perbase sequence content so I can take a look?

Looks like the big spike in uniformity is around base pairs 6 through 9, which could be the padding sequence or the linker before the primer. That's pretty normal for an amplicon.

Are you running this on your joined reads, or your unjoined paired-end reads?

This is for my joined reads @colinbrislawn .

Got it! Yeah, those look pretty typical for amplicons. I do notice that the variance goes does at the end of your first read...

What region are you amplifying in this data set, and how large is it?

The V1 region with an expected amplicon size of about 300-350bp.

I think we spoke about this before... but I want to make sure we are discussing the same thing.

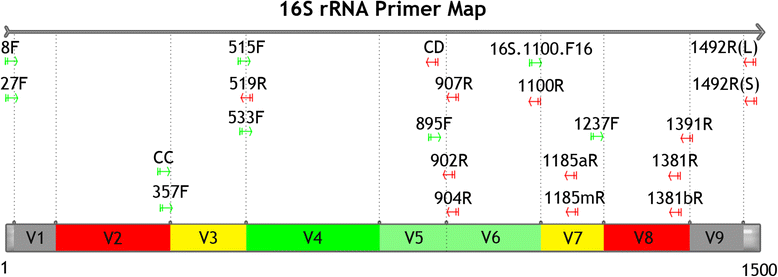

Figure 3 of this paper shows V1 to be pretty small.

Table 1 shows V1 going from 8 to 96 bp in E. Coli, which is less than 90 bp.

How are you calculating the 300-350 as the length of V1? What primers did you use to amplify your target region?

You are right, the V1 region is ~90bp in size. The 27f and 338R that targets the (V1-V2) hypervariable regions was used. V2 is~ 250bp in size hence the calculation 300-350bp

OK, that makes sense to me! Thank you for confirming the region sequenced and the expected length.

What was the length of the Illumina kit used to sequence these? Based on these graphs, I'm guessing 250 paired (500 cycle chemistry), but you can confirm this with the sequencing core.

I ask because this will let us calculate expected overlap, and help explain why some of these 'joined' reads end up being 579 bp long.

We know that PEAR is having trouble joining these reads, resulting in lengths of 500+ bp long, instead of the 300-350bp we expect. This is a common result of the joining algorithm overlapping just the very ends of long reads, instead of overlapping the correct region sequenced.

Thank you for sticking with me while we investigate this. We are making good progress!

demux-joined.qzv (302.6 KB)

Hello @colinbrislawn see attached the merging report from vsearch. there is a difference of about 2million sequence in total compared with the merging using pear.

It was 300bp paired end. 600bp total, V3miseq.

OK! So vsearch is doing a little better, but still not making enough overlap.

Could you re-run with --verbose then attach that log? It should give us more clues about how joining is working. The log will look like this:

Merging reads 100%

199077 Pairs

190464 Merged (95.7%)

8613 Not merged (4.3%)

Pairs that failed merging due to various reasons:

792 too few kmers found on same diagonal

4 potential tandem repeat

1727 too many differences

6080 alignment score too low, or score drop to high

10 staggered read pairs

Statistics of all reads:

250.82 Mean read length

Statistics of merged reads:

454.51 Mean fragment length

13.02 Standard deviation of fragment length

0.62 Mean expected error in forward sequences

0.70 Mean expected error in reverse sequences

0.87 Mean expected error in merged sequences

0.44 Mean observed errors in merged region of forward sequences

0.53 Mean observed errors in merged region of reverse sequences

0.97 Mean observed errors in merged region

(That example is from this post)

Then we can start playing with the vsearch settings to give us the correct overlap. See --p-minovlen in the vsearch join-pairs docs.

Hello @colinbrislawn

I did a quality trimming on the merge reads (pear) of two of my sequencing runs. The fastqc output is attached. Can I go ahead with the next steps for this? do you consider the total sequence for the first run (~50,000 sequence, see first picture) to be within the minimum standard for microbiome analysis?

Good evening,

Yes, that quality looks pretty good. You can continue on to analysis, if you would like. I think 50,000 reads per sample is quite good for depth. 50k reads for a full projects is a little small, but could be OK depending on the expected community diversity and coverage needed.

I would still recommend you try to get joining working with qiime vsearch join-pairs or another plugin within Qiime2. This will mean all your results will have full provenance recorded inside the artefact files, so you can see exactly what is going on. I can also help you optimize your vsearch joining settings, if you would like.

Colin

:qiime2:

Hello @colinbrislawn I would appreciate if you could help with optimizing the Vsearch join parameters. Here is the command I used:

qiime vsearch join-pairs

--i-demultiplexed-seqs 02_analyses/seqs/paired.qza

--o-joined-sequences 02_analyses/demux/demux-joined.qza.

I further did the sequenced quality filter using this command:

qiime quality-filter q-score

--i-demux 01_analyses/demux/demux-joined.qza

--o-filtered-sequences 01_analyses/demux/demux-joined-filtered.qza

--o-filter-stats 01_analyses/demux/demux-joined-filter-stats.qza

But during denoising none of my sequence passed the filter :

QIIME2/2021.11

Plugin error from deblur:

No sequences passed the filter. It is possible the trim_length (400) may exceed the longest sequence, that all of the sequences are artifacts like PhiX or adapter, or that the positive reference used is not representative of the data being denoised.

Debug info has been saved to /scratch/mxa1132_44121654.JdDbtE/qiime2-q2cli-err-jyv_jyls.log

The deblur command used was:

module purge; module load bluebear

module load QIIME2/2021.11

qiime deblur denoise-16S

--i-demultiplexed-seqs 01_analyses/demux/demux-joined-filtered.qza

--p-trim-length 400

--p-sample-stats

--o-representative-sequences 01_analyses/deblur/rep-seqs_deblur_400.qza

--o-table table_deblur_400.qza

--o-stats deblur-stats_400.qza

These reads were joined using Vsearch in qiime2.